Helping assessments meet learning outcomes while working with AI

Description: Many theoretical units benefit from having students program the concepts taught in class as a learning aid. Translating abstract theoretical concepts into practical implementations through a coding exercise reinforces understanding and often requires extended abstract reasoning. However, code generation tools can make these coding tasks trivial and remove this learning opportunity. The examples below suggest ways to work with, or around, generative AI tools while retaining the benefits of learning by coding.

Assessment types applicable: Programming exercises/ Software engineering

Unit Context: In computer vision laboratories, students are tasked with programming various algorithms or developing tools to process images.

Learning Objectives: These formative assessments typically help students understand the theoretical algorithms and techniques taught and the underlying properties of images, and give them the ability to turn theory into practical artefacts that solve real-world problems.

Which AI tools & why: Any (mainly ChatGPT, GitHub Copilot)

How is task & AI structured/scaffolded: We try to use three principles to facilitate the use of AI in these assessments.

1. Balancing learning opportunities where we think generative AI is useful vs when doing things from first principles may help.

In our assessments, we take an open approach to the use of generative AI, but we make recommendations (not requirements) about tasks within assessments where we feel students are better off not using it. For example, in a lab assessment on convolution, we recommended that students program the operation by hand to better understand the effects of padding and striding. We trusted that the students were mature enough to make the choice to learn, and for the most part found that they were. In a class of ~200 students, only 2 answers appeared to use notation and style consistent with a generative AI solution.
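As an illustration of the kind of by-hand exercise meant here, the following is a minimal sketch of a 2D convolution with zero padding and striding. It is not the lab's actual solution or starter code: the function name `conv2d`, its parameters, and the use of plain Python lists are assumptions made for this example.

```python
def conv2d(image, kernel, padding=0, stride=1):
    """Naive 2D convolution of a list-of-lists image with a kernel.

    Hypothetical helper for illustration only. `padding` is the number of
    zero-valued pixels added on every side; `stride` is the step between
    successive kernel positions.
    """
    kh, kw = len(kernel), len(kernel[0])
    h, w = len(image), len(image[0])

    # Zero-pad the image on all four sides.
    padded = [[0.0] * (w + 2 * padding) for _ in range(h + 2 * padding)]
    for r in range(h):
        for c in range(w):
            padded[r + padding][c + padding] = float(image[r][c])

    # Flip the kernel in both axes: this is what distinguishes true
    # convolution from cross-correlation.
    flipped = [row[::-1] for row in kernel[::-1]]

    # Output size follows from the padded size, kernel size, and stride.
    out_h = (h + 2 * padding - kh) // stride + 1
    out_w = (w + 2 * padding - kw) // stride + 1

    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0.0
            for u in range(kh):
                for v in range(kw):
                    acc += padded[i * stride + u][j * stride + v] * flipped[u][v]
            row.append(acc)
        out.append(row)
    return out
```

Writing the output-size calculation and the padded indexing by hand is where the effects of padding and striding become visible: with a 3x3 box kernel on a 4x4 image, `padding=0` shrinks the output to 2x2, while `padding=1` preserves the size but darkens the borders because the padded zeros enter the sum.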

2. Shifting to higher level reasoning/ increased complexity and understanding

Prior to generative AI, we placed significantly more focus on lower-level implementation. With the advent of code generation, we can now extend lab complexity and aim for extended abstract learning outcomes. We therefore add tasks that require students to chain together earlier low-level programming tasks to solve a larger problem. Students get much closer to a finished product or application (which helps with real-world translation) and spend more time on higher-level reasoning about how components integrate, which is closer to what our learning outcomes aim to achieve.

An assignment to write code to make image panoramas from two or more photos.

3. Multi-stage questions requiring subjective decision-making based on multimodal input

This may not be an option for long, but generative AI systems are still limited in their ability to perform chained reasoning over multimodal input, and by token length limits. We exploit this by structuring assessments as sequences of tasks that can each be performed independently for some marks, but that ultimately require students to reason about their choices based on the output of previous tasks. For example, in a panorama photo stitching lab, we ask students to apply theory to improve the quality of the seam (the strip where the images are stitched). The strategy they choose is determined by the output of previous tasks and requires them to inspect the artefacts in the seam to make an appropriate choice. Translating this into a suitable prompt requires the same reasoning as solving the task without generative AI, so students meet our learning outcomes regardless of the approach they take. As task chains get longer, the token length limits of models are reached, and students relying on generative AI need to be more concise in their code generation, which requires even more abstract thinking and explanation.

A panorama image generated at previous stages of the assessment introduces artefacts. Coming up with strategies to correct these requires extended abstract reasoning.
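To make the seam task concrete, here is a minimal sketch of one possible correction strategy, linear feathering, which cross-fades two images across their overlap. This is an assumed example rather than the strategy the lab prescribes: the appropriate choice depends on the artefacts students observe, and the function name `feather_blend`, the greyscale list-of-lists representation, and the assumption that the images are already aligned are all hypothetical.

```python
def feather_blend(left, right, overlap):
    """Blend two aligned greyscale images that share `overlap` columns.

    Hypothetical helper for illustration only. The last `overlap` columns
    of `left` are assumed to show the same scene content as the first
    `overlap` columns of `right`.
    """
    if len(left) != len(right):
        raise ValueError("images must have the same number of rows")
    wl, wr = len(left[0]), len(right[0])
    if not 1 <= overlap <= min(wl, wr):
        raise ValueError("overlap must be between 1 and the image width")

    out = []
    for lrow, rrow in zip(left, right):
        # Columns seen only by the left image are copied unchanged.
        row = [float(v) for v in lrow[:wl - overlap]]
        # Inside the overlap, the right image's weight ramps up linearly.
        for k in range(overlap):
            alpha = (k + 1) / (overlap + 1)
            row.append((1 - alpha) * lrow[wl - overlap + k] + alpha * rrow[k])
        # Columns seen only by the right image are copied unchanged.
        row.extend(float(v) for v in rrow[overlap:])
        out.append(row)
    return out
```

For example, `feather_blend([[10, 10, 10, 10]], [[20, 20, 20, 20]], 3)` replaces a hard brightness step with a gradual ramp. Feathering of this kind hides exposure differences but blurs or ghosts misaligned detail, so deciding whether it suits a given seam still requires inspecting the output of the earlier stages.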

What support/guidance was needed for students:

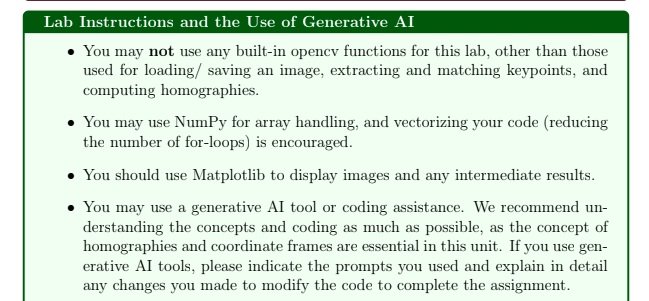

We provided specific instructions on which libraries and tools should be used (e.g. pre-existing plotting and visualisation tools, and tools for numerical calculations) and which should be avoided (existing computer vision tools that already implement the concepts being learned), along with recommendations on the use of coding assistants (provide the prompts used where necessary).

How were responsible use and ethical issues addressed? We ask students to document the prompts they used and to comment their code to explain the steps they took. We also ask them to interpret the program outputs and explain why these results were obtained.

2-3 things that worked well: Students appreciated the open approach to generative AI, and that the choice to use it was theirs. Students trusted our recommendations on where we think generative AI is better avoided, and we did not need to enforce this.

2-3 things that need improvement: Relying on the multimodal limits of generative AI when it comes to extended abstract reasoning may not be a long-term strategy. Alternative assessments will be required if these capabilities improve and are connected to code execution, enabling longer chains of thought.

Things to consider when adapting to your context: What are your learning outcomes around programming assessments? If your learning outcomes are for students to learn low-level coding or syntax, then this approach may not be for you.